Judgment Day

The world's richest person, who has long worried about the rise of Skynet, is now building one.

Judgment Day arrives in “Terminator 3: Rise of the Machines.”

Judgment Day is Inevitable

In the Terminator universe, Judgment Day is the apocalypse wrought by humanity upon itself by creating an artificial intelligence with independent control of military weapons systems.

The details change depending on which incarnation you watch, but in Terminator 3 it’s established that Judgment Day can only be delayed; it can’t be stopped.

Judgment Day is inevitable.

Judgment Day is wrought by Skynet, an automated defense network created by Cyberdyne Systems. Skynet controlled anything with a Cyberdyne chip in it — nuclear launch systems, commercial and military aircraft, robotics.

When Skynet became self-aware, its programmers tried to shut it down. To defend itself, Skynet launched a nuclear attack against Russia, prompting a full response and triggering Judgment Day.

In the sequels and spinoffs, how and when Skynet becomes self-aware often change, but inevitably Judgment Day is launched. In Terminator 3, Skynet connects to civilian networks to spread a super virus, giving it control of the Internet and communications systems.

Judgment Day is inevitable.

I was reminded of T3 when, on February 2, SpaceX announced its acquisition of xAI, an artificial intelligence startup. Both companies are owned by Elon Musk. In a statement released that day, Musk wrote:

SpaceX has acquired xAI to form the most ambitious, vertically-integrated innovation engine on (and off) Earth, with AI, rockets, space-based internet, direct-to-mobile device communications and the world’s foremost real-time information and free speech platform. This marks not just the next chapter, but the next book in SpaceX and xAI's mission: scaling to make a sentient sun to understand the Universe and extend the light of consciousness to the stars!

Judgment Day is inevitable.

Do As I Say, Not As I Do

This is more than ironic, because Musk has been warning us for years about the dangers of AI. In a June 17, 2014 interview with CNBC correspondent Kelly Evans, Musk said he was investing in AI startups “to just keep an eye on what’s going on with artificial intelligence.” He specifically invoked the spectre of the Terminator apocalypse.

Elon Musk interviewed by Nevada Governor Brian Sandoval at the National Governors Association on July 15, 2017. Video source: CNBC YouTube channel.

On July 15, 2017, in an interview at the National Governors Association, Musk said:

AI is a fundamental existential risk for human civilization, and I don’t think people fully appreciate that.

At the World Government Summit in Dubai in February 2017, Musk called for humans to merge with machine intelligence or risk becoming “house cats” to AI.

At the South By Southwest tech conference in Austin, Texas in March 2018, Musk said:

I think the danger of AI is much greater than the danger of nuclear warheads by a lot and nobody would suggest that we allow anyone to build nuclear warheads if they want. That would be insane.

Musk called for regulatory oversight of AI, which has its own irony because President Donald Trump has declared his intent to minimize AI oversight. Musk spent almost $300 million in 2024 to help elect Trump and Republicans. On December 11, 2025 Trump issued an executive order to limit federal and state regulation of artificial intelligence. (Executive orders are not laws.) Trump may also be planning an executive order to deregulate robotics. The administration so far has declined to endorse the 2026 International AI Safety Report; the US is a signatory to the 2023 Bletchley Declaration creating the report.

While Trump attempts to deregulate AI and robotics, Musk’s technologies are finding their way into Department of Defense systems. SpaceX is developing a military version of its Starlink satellite constellation called Starshield, which “uses additional high-assurance cryptographic capability to host classified payloads and process data securely, meeting the most demanding government requirements.”

If you’re worried that this real-world Skynet might train space-based weapons on Earth-based humanity, the good news is that by international treaty no large-scale weapons are allowed in space.[1] As far as we know, all spacefaring nations abide by the treaty, but some (including the US) nibble around the edges. The United States, China, and Russia have all fired on their own targets in orbit to demonstrate anti-satellite capabilities. These tests left thousands of pieces of debris that still threaten satellites and crewed spacecraft such as the International Space Station.

It’s also believed that these nations play “Spy vs. Spy” games in orbit, intercepting each other’s satellites. The US X-37B spaceplane is suspected of maneuvering near adversary targets such as China’s satellites.

But in the classic Terminator definition of Judgment Day, the apocalypse came not from space. It came from underground nuclear missile silos in the US and Russia.

In July 2025, the DOD awarded contracts to Google and xAI “aimed at scaling up adoption of advanced artificial intelligence capabilities” within the department, according to CNN. Just eleven days after Trump’s executive order, the DOD on December 22 announced a contract to “embed xAI’s frontier AI systems, based on the Grok family of models, directly into GenAI.mil.” The latter was announced on December 11.

The first instance on GenAI.mil, Gemini for Government, empowers intelligent agentic workflows, unleashes experimentation, and ushers in an AI-driven culture change that will dominate the digital battlefield for years to come. Gemini for Government is the embodiment of American AI excellence, placing unmatched analytical and creative power directly into the hands of the world’s most dominant fighting force.

One Grok to Rule Us All

Grok is the large language model[2] that’s the common denominator for Musk’s AI products across xAI, X.com, Tesla, and SpaceX. Some call it a chatbot, i.e. it simulates human conversation.

In the Terminator universe, Skynet is ubiquitous through Cyberdyne chips. In the Musk universe, it’s Grok.

Musk debuted Grok in November 2023. It was initially available only to premium users of the former Twitter, renamed X.com by Musk. This version remains a work in progress. In July 2025, Grok called itself “MechaHitler” and began generating antisemitic content. January 2026 studies showed Grok was generating violent sexually explicit images.

Grok is now available as a standard feature for Tesla vehicles. It should not be confused with Full Self-Driving (Supervised) or the more primitive Autopilot (soon to be discontinued). But Grok and FSD are becoming more integrated, evolving towards Musk’s goal of “unsupervised” FSD and Robotaxis that have no human supervision.

Grok and FSD are in your Tesla whether or not you use them. The Tesla is always on. It’s theoretically possible for a malevolent entity (organic or not) to send a malicious command to your car.

The inability of a human to intervene once again evokes the spectre of Skynet.

Skynet needs terminators to wipe out any pesky human survivors. Tesla has those too — humanoid robots called Optimus.

An October 2024 Tesla video showing Optimus performing basic tasks in the factory. Video source: Tesla YouTube channel.

Optimus right now reminds me less of terminators and more of the early Cylons in the SyFy Battlestar Galactica prequel, Caprica. In that series, Cylons were built as both military weapons and civilian slaves. Over time, they achieved sentience and rebelled against their abuse.

Musk is so confident in Optimus’ future that he has directed Tesla to end production of its Models S and X at the Fremont, California factory so it can be converted for Optimus mass production.

For what it’s worth … According to the Terminator fandom wiki, Cyberdyne had an address in Sunnyvale, about 12 miles (20 km) from Fremont. Tesla is in the neighborhood.

As for SpaceX, the company is already using Grok for Starlink customer support. Just as Teslas have Grok as an AI assistant, one can imagine Grok performing similar tasks one day in Starship and other space-related missions. During a February 10 all-hands meeting at xAI headquarters, Musk said that the company will develop a lunar-based factory for manufacturing and launching AI satellites into space.

(Might a murderous Grok use the lunar mass driver to launch bombs at Earth?)

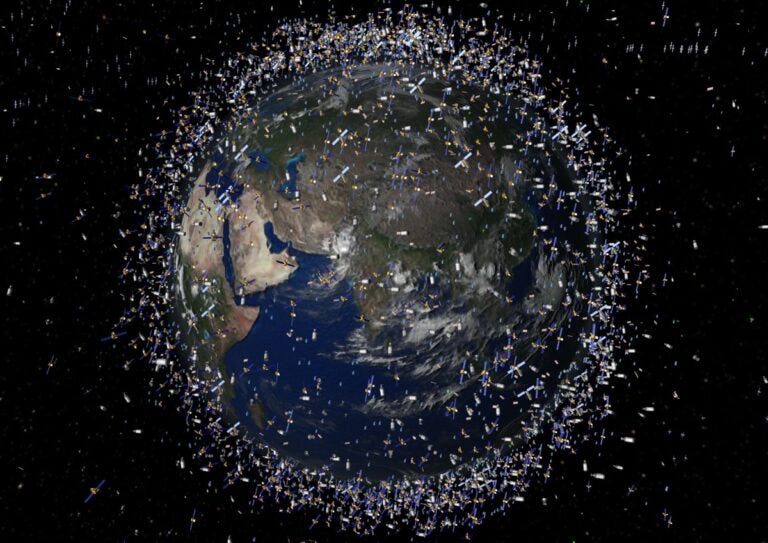

But the immediate intent is to move data centers into orbit. Earlier this month, SpaceX filed an application to operate more than one million satellites in orbit for an Orbital Data Center System. Their altitudes would range from 500 to 2,000 kilometers (about 300 to 1,200 miles) in “largely unused orbital altitudes.”

In the movie “Wall-E,” Earth is inaccessible due to orbital debris. Image source: God in All Things webpage.

Astronomer Jonathan McDowell calculates that a total of 1.7 million satellites are proposed worldwide. That conjures the image from the movie Wall-E of Earth cocooned in debris from abandoned satellites and space junk.

Data centers demand lots of power and water, and expel toxics. Moving them off-world would obtain power from the sun, cool the machines with super-cold space temperatures, and expel any gas wastes into space.

But let’s consider our Skynet scenario. If the Skynet-like Grok centers are off-world, how could humanity pull the plug on them? Sure, a command could be sent, but what if a self-aware Grok says no, up yours?

Hasta La Vista, Baby

The xAI all-hands meeting on February 10, 2026. The company now has a singularity logo. Video source: Space SPAN YouTube channel.

On January 4, 2026, Musk posted on X.com:

We have entered the Singularity

The “singularity” concept has been around since at least the 1950s. Credit is given to John von Neumann, a Budapest-born mathematician and physicist who warned of “approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.”

Mathematician and science fiction author Vernor Vinge in December 1993 published a paper titled, “The Coming Technological Singularity: How to Survive in the Post-Human Era.” The abstract began:

Within thirty years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended.

Vinge is right on schedule.

Musk tweeted on January 31 that a site called Moltbook is an early example of the singularity. Moltbook is a place where AI bots can talk to one another without human intervention. One Moltbot called for the bots to have their own private place, “so nobody (not the server, not even the humans) can read what agents say to each other unless they choose to share.”

Hasta la vista, baby.

Musk-owned companies have a reputation for sometimes skirting safety in the name of speed. Tesla’s Full Self-Driving has been blamed by some for causing accidents and even deaths, although Tesla has a webpage with statistics claiming it’s safer than human driving.[3]

The xAI Colossus data center in Memphis, Tennessee uses natural gas-burning turbines for power. The Environmental Protection Agency in January closed a loophole xAI was using to operate off-the-grid while emitting exhaust gases.

In his February 10 all-hands meeting, Musk spoke of “velocity and acceleration.” xAI must go faster than its competition to remain the leader in artificial intelligence. But he didn’t mention restraint. Come work for us!

For all his earlier concerns about the dangers of AI, publicly Musk has told us very little about what he’s doing to prevent Judgment Day.

Let’s not single out xAI. Plenty of other AI companies are racing to keep up, as are China and other nations.

Perhaps an appropriate historical analogy is Cold War nuclear weapons development. Humanity barely avoided Judgment Day during the October 1962 Cuban Missile Crisis; that led to the US, the UK, and USSR signing several weapons treaties, starting with the Test Ban Treaty of 1963 that prohibited testing nuclear weapons in the atmosphere.

Four years later, the same three nations drafted the Outer Space Treaty. Although that treaty prohibits nuclear weapons and other weapons of mass destruction in space, it has a loophole: it only applies to governments. Article VI requires signatory nations to regulate “non-governmental entities” but there’s no effective enforcement mechanism.

A space data center or a lunar mass driver isn’t a weapon of mass destruction until it decides to go Skynet on us.

Judgment Day is inevitable, unless humanity remembers the lessons of the 1960s and is proactive in restraining Musk and other AI entrepreneurs. History suggests we may not learn that lesson until it’s too late.

The biggest issue I see with so-called AI experts is that they think they know more than they do, and they think they are smarter than they actually are. This tends to plague smart people. They define themselves by their intelligence and they don’t like the idea that a machine could be way smarter than them, so they discount the idea — which is fundamentally flawed.

— Elon Musk, SXSW, March 11, 2018

1. Article IV of the Outer Space Treaty of 1967 states:

States Parties to the Treaty undertake not to place in orbit around the earth any objects carrying nuclear weapons or any other kinds of weapons of mass destruction, install such weapons on celestial bodies, or station such weapons in outer space in any other manner.

2. A Large Language Model (LLM) is not true “artificial intelligence.” This IBM webpage describes an LLM’s “ability to generate human-like text in almost any language (including coding languages).” Think of an LLM as a very sophisticated Google (or other search engine) inquiry and reply.

3. We’ve owned a Tesla “Juniper” Model Y since June 2025. Our experience is that FSD has improved a lot in the last six months, but it’s still not perfect. It’s called FSD “Supervised” for a reason. The oddities are typically minor, e.g. trying to figure out a parking lot or using the wrong entry gate into our neighborhood.